INTRODUCTION

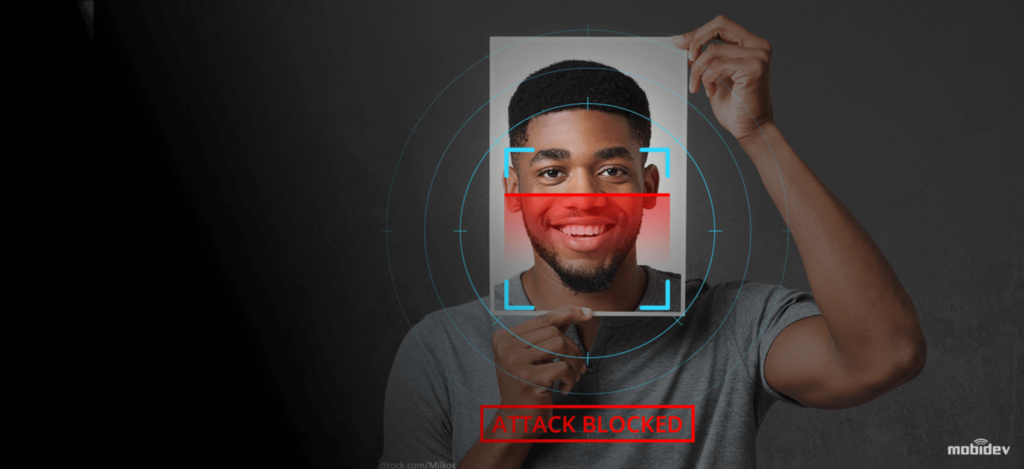

Nowadays, it is well known that most existing face recognition systems are vulnerable to spoofing attacks. A spoofing attack occurs when someone tries to bypass a face biometric system by presenting a fake face in front of the camera. In this project, we use an image quality assessment (IQA) method to detect face spoofing.

BACKGROUND

In recent years, the increasing interest in evaluating the security of biometric systems has led to numerous and very diverse initiatives in this major field of research. These include:

- the publication of many research works disclosing and evaluating different biometric vulnerabilities;
- the proposal of new protection methods;
- related book chapters;
- the publication of several standards in the area;
- dedicated tracks, sessions, and workshops at biometric-specific and general signal processing conferences;
- competitions focused on vulnerability assessment;
- the acquisition of specific datasets;
- the creation of groups and laboratories specialized in the evaluation of biometric security;
- several European projects with biometric security as their main research interest.

All these initiatives clearly show how much importance everyone involved in developing biometrics (researchers, developers, and industry) places on improving system security. That security is what will bring this rapidly emerging technology into practical use.

OBJECTIVES

In the present work, we propose a novel software-based multi-biometric and multi-attack protection method that aims to overcome part of these limitations through image quality assessment (IQA).

The method performs very well across different biometric systems (multi-biometric) and diverse spoofing scenarios. It also provides a very good level of protection against certain non-spoofing attacks (multi-attack).

Being software-based, it has the usual advantages of this type of approach:

- It is fast, because it needs only one image (the same sample acquired for biometric recognition) to decide whether that sample is real or fake.
- It is non-intrusive.
- It is user-friendly, since it is transparent to the user.
- It is cheap and easy to embed in already functional systems, because no new hardware is required.

A further advantage is its very low complexity, which makes it well suited to real scenarios (one of the desired characteristics of this type of method). It does not use any trait-specific property such as minutiae points, iris position, or face detection. As a result, the computational load for image processing is greatly reduced: the method relies only on general image quality measures that are fast to compute, combined with very simple classifiers.
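The IQA idea described above can be illustrated without OpenCV. This is a minimal sketch under stated assumptions, not the project's implementation:

- It uses a hypothetical `GreyImage` type.
- It smooths the image with a 3x3 binomial kernel, a small Gaussian approximation chosen here for illustration.
- It compares the original with its smoothed copy using two of the full-reference measures used in this project, MSE and PSNR.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical grey-scale image type: row-major pixel values in [0, 255].
struct GreyImage {
    int rows;
    int cols;
    std::vector<double> px;
    double at(int r, int c) const { return px[static_cast<std::size_t>(r) * cols + c]; }
};

// Smoothed copy of `in` using a 3x3 binomial kernel (a small Gaussian
// approximation). Border pixels replicate the nearest edge pixel.
GreyImage smooth3x3(const GreyImage& in) {
    static const int k[3][3] = {{1, 2, 1}, {2, 4, 2}, {1, 2, 1}};
    GreyImage out{in.rows, in.cols, std::vector<double>(in.px.size(), 0.0)};
    for (int r = 0; r < in.rows; ++r) {
        for (int c = 0; c < in.cols; ++c) {
            double acc = 0.0;
            for (int dr = -1; dr <= 1; ++dr) {
                for (int dc = -1; dc <= 1; ++dc) {
                    int rr = std::min(std::max(r + dr, 0), in.rows - 1);
                    int cc = std::min(std::max(c + dc, 0), in.cols - 1);
                    acc += k[dr + 1][dc + 1] * in.at(rr, cc);
                }
            }
            out.px[static_cast<std::size_t>(r) * in.cols + c] = acc / 16.0;  // kernel sum is 16
        }
    }
    return out;
}

// Mean squared error between two images of the same size.
double meanSquaredError(const GreyImage& a, const GreyImage& b) {
    assert(a.px.size() == b.px.size() && !a.px.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.px.size(); ++i) {
        const double d = a.px[i] - b.px[i];
        sum += d * d;
    }
    return sum / static_cast<double>(a.px.size());
}

// PSNR in dB for 8-bit images; infinite when the two images are identical.
double psnrDb(double mse) {
    if (mse == 0.0) return std::numeric_limits<double>::infinity();
    return 10.0 * std::log10(255.0 * 255.0 / mse);
}
```

For example, a flat 4x4 image is unchanged by smoothing (MSE of 0). A 0/255 checkerboard, by contrast, loses most of its detail and gives a large MSE. The method's premise is that real and fake samples differ in quality, so such distortion values are expected to differ between them and can serve as classifier features.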

SYSTEM ANALYSIS

There are many existing systems for face spoofing detection, but they have some limitations. This section presents a detailed study of the existing systems in the form of a literature survey. It also gives brief details about the proposed system.

2.1 EXISTING SYSTEM

Despite many advances in face recognition systems, face spoofing still poses a serious threat. Most existing academic and commercial facial recognition systems may be spoofed by:

(i) a photo of a genuine user;
(ii) a video of a genuine user;
(iii) a 3D face model (mask) of a genuine user;
(iv) a reverse-engineered face image from the template of a genuine user;
(v) a sketch of a genuine user;
(vi) an impostor wearing specific make-up to look like a genuine user;
(vii) an impostor who underwent plastic surgery to look like a genuine user.

The easiest, cheapest, and most common face spoofing attack is to present a photograph of a legitimate user to the face recognition system. This is also known as a “photo attack.”

Typical countermeasure techniques can be coarsely classified into three categories, based on the clues used for spoof attack detection: (i) motion analysis based methods, (ii) texture analysis based methods, and (iii) hardware-based methods. In what follows, we provide a brief overview of published face spoof detection techniques along with their pros and cons.

(i) Motion Analysis Based Methods. These methods broadly try to detect the spontaneous movement clues generated when two-dimensional counterfeits, such as photographs or videos, are presented to the camera of the system.

One approach exploited the fact that a human eye-blink occurs once every 2–4 seconds and proposed eye-blink based liveness detection against photo spoofing. It models eye-blinking with an undirected conditional random field framework. This relaxes the independence assumption of generative modeling and the state dependence limitations of hidden Markov modeling.

Real human faces also move very differently from planar objects, and such deformation patterns can be used for liveness detection. For example, one method used a Lambertian reflectance model with difference-of-Gaussians (DoG) filtering. It derives the differences in motion deformation patterns between 2D face photos presented during spoofing attacks and 3D live faces. It extracts features in both scenarios using a variational retinex-based method and a difference-of-Gaussians based approach, and then uses these features for live or spoof classification. Reported experiments showed promising results on a dataset of real accesses and spoofing attacks for 15 clients, using photo-quality and laser-quality prints.

Kollreider proposed a liveness detection approach based on a short sequence of images and a binary detector. The detector captures and tracks the subtle movements of selected facial parts using a simplified optical flow analysis, followed by a heuristic classifier. The same authors also presented a method to combine scores from different expert systems. These experts concurrently observe the 3D face motion introduced in the former work and liveness attributes such as eye-blinks or mouth movements. In a similar fashion, optical flow has also been used to estimate motion for detecting attacks produced with planar media such as prints or screens.

Since the frequency of facial motion is restricted by the human physiological rhythm, motion-based methods take a relatively long time to accumulate stable vitality features for face spoof detection. Moreover, they may be circumvented or confused by other motions, for example background motion in video attacks.
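To make the eye-blink cue concrete, here is a much simpler heuristic than the conditional random field method described above. It is a sketch under stated assumptions:

- A per-frame eye state (open or closed) is assumed to be already available from some eye-state detector, which is not shown.
- The function names are hypothetical.
- The 4-second default gap is an assumption taken from the 2–4 second blink interval quoted in the text.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Counts blinks in a per-frame eye-state sequence (true = eyes closed);
// each open -> closed transition is counted as one blink.
int countBlinks(const std::vector<bool>& eyeClosed) {
    int blinks = 0;
    bool previousClosed = false;
    for (std::size_t i = 0; i < eyeClosed.size(); ++i) {
        const bool closed = eyeClosed[i];
        if (closed && !previousClosed) ++blinks;
        previousClosed = closed;
    }
    return blinks;
}

// Hypothetical rule: if a blink is expected at least every maxGapSeconds,
// a live face observed for `duration` seconds should blink at least
// floor(duration / maxGapSeconds) times. Short clips impose no requirement.
bool blinkRateLooksLive(const std::vector<bool>& eyeClosed, double fps,
                        double maxGapSeconds = 4.0) {
    assert(fps > 0.0 && maxGapSeconds > 0.0);
    const double duration = static_cast<double>(eyeClosed.size()) / fps;
    const int minimumBlinks = static_cast<int>(duration / maxGapSeconds);
    return countBlinks(eyeClosed) >= minimumBlinks;
}
```

The sketch also reflects the drawback noted above. A clip shorter than the maximum blink gap gives no evidence either way, so blink-based checks need several seconds of video.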

(ii) Texture Analysis Based Methods. This kind of method examines skin properties, such as skin texture and skin reflectance. It assumes that the surface properties of real faces and prints (for example, pigments) are different. Examples of detectable texture patterns caused by artifacts are printing failures and blurring.

One method detects print-attack face spoofing by exploiting differences in the 2D Fourier spectra of live and spoof images. It assumes that photographs are normally smaller in size and contain fewer high-frequency components than real faces. The method works well only for downsampled photos of the attacked identity and is likely to fail for higher-quality samples.

Microtexture analysis based methods have also been developed to detect printed photo attacks. One limitation of these methods is that they require a reasonably sharp input image.
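The Fourier-spectrum cue can be illustrated with a single statistic: the fraction of non-DC spectral energy above a normalised frequency cutoff. This is a hypothetical sketch, not the published method:

- `highFrequencyRatio` and the cutoff value are assumptions.
- The naive DFT is practical only for small patches. A real implementation would use an FFT such as OpenCV's `cv::dft`.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Fraction of the non-DC spectral energy of `img` whose normalised radial
// frequency exceeds `cutoff` (frequencies lie in [-0.5, 0.5] per axis).
// Uses a naive O((M*N)^2) DFT, so it is only suitable for small patches.
double highFrequencyRatio(const std::vector<std::vector<double>>& img, double cutoff) {
    assert(!img.empty() && !img[0].empty());
    const int M = static_cast<int>(img.size());
    const int N = static_cast<int>(img[0].size());
    const double pi = std::acos(-1.0);
    double high = 0.0, total = 0.0;
    for (int u = 0; u < M; ++u) {
        for (int v = 0; v < N; ++v) {
            if (u == 0 && v == 0) continue;  // skip the DC term (mean brightness)
            double re = 0.0, im = 0.0;
            for (int x = 0; x < M; ++x) {
                for (int y = 0; y < N; ++y) {
                    const double phase = -2.0 * pi * (double(u) * x / M + double(v) * y / N);
                    re += img[x][y] * std::cos(phase);
                    im += img[x][y] * std::sin(phase);
                }
            }
            const double energy = re * re + im * im;
            // Map DFT indices to signed frequencies (cycles per pixel).
            const double fu = double(u <= M / 2 ? u : u - M) / M;
            const double fv = double(v <= N / 2 ? v : v - N) / N;
            total += energy;
            if (std::sqrt(fu * fu + fv * fv) > cutoff) high += energy;
        }
    }
    return total > 0.0 ? high / total : 0.0;
}
```

Under the method's assumption, a recaptured photograph would tend to give a lower ratio than a live face. Any decision threshold would have to be learned from data.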

(iii) Hardware-Based Methods. A few interesting hardware-based face antispoofing techniques have been proposed so far. They rely on imaging technology outside the visual spectrum, such as 3D depth, complementary infrared (CIR), or near-infrared (NIR) images. For example, the reflectance information of real faces and spoof materials can be compared using a specific set-up of LEDs and photodiodes at two different wavelengths. Preliminary work on thermal imaging for face liveness detection has also been carried out, including the acquisition of a large database of thermal face images for real and spoofed access attempts.

In addition, a number of researchers have explored multimodality as an antispoofing technique for face spoofing attacks. They have mainly combined face and voice, using the correlation between lip movement and the speech being produced; this requires a microphone and a speech analyzer. Similarly, a challenge-response strategy has been studied, in which the user performs voluntary eye-blinks and mouth movements on request from the system.

Although hardware-based methods provide better results and performance, they require extra hardware, which increases the cost of the system.
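The face-and-voice cue (correlation between lip movement and speech) can be sketched as a Pearson correlation between two per-frame signals. This is a simplified illustration under these assumptions:

- The mouth-opening and audio-energy signals are extracted elsewhere and already aligned frame by frame.
- `lipsMatchSpeech` is a hypothetical name.
- The 0.5 threshold is arbitrary.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Pearson correlation coefficient between two equally long per-frame signals.
double pearson(const std::vector<double>& a, const std::vector<double>& b) {
    assert(a.size() == b.size() && a.size() > 1);
    const double n = static_cast<double>(a.size());
    double meanA = 0.0, meanB = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) { meanA += a[i]; meanB += b[i]; }
    meanA /= n;
    meanB /= n;
    double sab = 0.0, saa = 0.0, sbb = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double da = a[i] - meanA, db = b[i] - meanB;
        sab += da * db;
        saa += da * da;
        sbb += db * db;
    }
    if (saa == 0.0 || sbb == 0.0) return 0.0;  // a constant signal carries no evidence
    return sab / std::sqrt(saa * sbb);
}

// Hypothetical decision: accept only if lip motion tracks the speech energy.
bool lipsMatchSpeech(const std::vector<double>& mouthOpening,
                     const std::vector<double>& audioEnergy,
                     double minCorrelation = 0.5) {
    return pearson(mouthOpening, audioEnergy) >= minCorrelation;
}
```

A static photograph yields a constant mouth-opening signal, which this sketch treats as no evidence of liveness (correlation 0). Audio-video lag is ignored here.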

OVERALL ALGORITHM

Step 1: Start

Step 2: Images are collected using a high-resolution camera.

Step 3: Each image is labelled as spoofed or original.

Step 4: A training dataset is created from the collected images.

Step 5: All the images are preprocessed.

Step 6: Image quality features are extracted from each image.

Step 7: An SVM model is trained on the extracted features.

Step 8: New data is given to the model for testing.

Step 9: Steps 5 and 6 are repeated for the new data.

Step 10: The SVM classifies the new data as spoofed or original.
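Steps 7 and 10 rely on an SVM. The project's `SupportVectorMachine` class is not listed in this report, so the following is only a stand-in sketch. It is a linear SVM trained with Pegasos-style stochastic sub-gradient descent on the regularised hinge loss. It visits samples in a fixed order instead of randomly and folds the bias into a constant feature. Label +1 stands for original and -1 for spoofed. `LinearSvm`, `lambda` and `epochs` are hypothetical names and defaults.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Linear SVM trained with Pegasos-style sub-gradient descent on
// (lambda / 2) * ||w||^2 + mean hinge loss. Labels: +1 original, -1 spoofed.
struct LinearSvm {
    std::vector<double> w;  // last entry acts as the bias (constant feature 1)

    double decision(const std::vector<double>& x) const {
        double s = w.back();
        for (std::size_t j = 0; j + 1 < w.size(); ++j) s += w[j] * x[j];
        return s;
    }

    int predict(const std::vector<double>& x) const { return decision(x) >= 0.0 ? 1 : -1; }

    void train(const std::vector<std::vector<double>>& X, const std::vector<int>& y,
               double lambda = 0.01, int epochs = 200) {
        assert(!X.empty() && X.size() == y.size());
        const std::size_t d = X[0].size();
        w.assign(d + 1, 0.0);
        long t = 0;
        for (int e = 0; e < epochs; ++e) {
            for (std::size_t i = 0; i < X.size(); ++i) {
                ++t;
                const double eta = 1.0 / (lambda * static_cast<double>(t));  // step size
                const bool violated = y[i] * decision(X[i]) < 1.0;         // inside the margin
                for (double& wj : w) wj *= 1.0 - eta * lambda;             // regulariser shrink
                if (violated) {                                            // hinge sub-gradient
                    for (std::size_t j = 0; j < d; ++j) w[j] += eta * y[i] * X[i][j];
                    w[d] += eta * y[i];
                }
            }
        }
    }
};
```

The IQA features have very different ranges (for example, PSNR in decibels versus NAE as a small ratio). Standardising each feature on the training set before training is therefore usually advisable for an SVM.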

SOURCE CODE

This project includes training, testing, and classification using an SVM. The source code for these parts is provided here.

6.1 FEATURE EXTRACTION

// FACE SPOOFING DETECTION PROJECT REPORT 2018-19
// DEPARTMENT OF COMPUTER SCIENCE, THEJUS ENGINEERING COLLEGE

#include <opencv2/opencv.hpp>
#include <algorithm>
#include <cmath>
#include <functional>
#include <iostream>
#include <string>
#include <vector>

using namespace cv;
using namespace std;

// DatabaseReader, DFT and SupportVectorMachine are classes of this project;
// their definitions are not part of this listing.
void training()
{
    DatabaseReader dr;
    dr.readTrainingFiles();
    vector<int> labels = dr.getTrainLabels();
    vector<string> trainingFileNames = dr.getTrainFileNames();

    Mat inputImage, greyImage, blurImage;
    Mat trainingData;
    Mat trainingLabels;

    cout << "size = " << trainingFileNames.size() << endl;

    for (size_t index = 0; index < trainingFileNames.size(); index++)
    {
        // Load the image, convert it to grey scale and build its smoothed copy.
        // The 3x3 Gaussian kernel with sigma 0.5 is an assumed choice.
        inputImage = imread(trainingFileNames[index]);
        if (inputImage.empty())
            continue;   // skip unreadable files (neither data nor label is added)
        cvtColor(inputImage, greyImage, COLOR_BGR2GRAY);
        GaussianBlur(greyImage, blurImage, Size(3, 3), 0.5);

        vector<float> featureVector;   // float, so each feature row is CV_32F
        double MSE, PSNR, SNR, SC, MD, AD, NAE, RAMD, LMSE, NXC, MAS, MAMS, TED;
        double sum = 0, sumOfSquaresG = 0, sumOfSquaresB = 0, sumForAD = 0;
        double sumForNAE = 0, sumForG = 0, sumOfProductsNXC = 0, sumForTED = 0;
        double sumForMAS = 0, sumForMAMS = 0, sumForLMSE = 0, sumForLMSEG = 0;
        const double PI = 3.14159265;
        vector<double> differenceVector, absDifferenceVector;

        const int ROW = blurImage.rows;
        const int COL = blurImage.cols;
        const double N = static_cast<double>(ROW) * COL;   // number of pixels

        // Pixel-wise accumulations for the full-reference quality measures
        for (int row = 0; row < ROW; row++)
        {
            for (int col = 0; col < COL; col++)
            {
                double greyIntensity = greyImage.at<uchar>(row, col);
                double blurIntensity = blurImage.at<uchar>(row, col);

                // 1. MSE
                double difference = greyIntensity - blurIntensity;
                differenceVector.push_back(difference);
                sum += difference * difference;
                // 3. SNR
                sumOfSquaresG += greyIntensity * greyIntensity;
                // 4. SC
                sumOfSquaresB += blurIntensity * blurIntensity;
                // 7. NAE
                double absDifference = std::abs(difference);
                absDifferenceVector.push_back(absDifference);
                sumForNAE += absDifference;
                sumForG += greyIntensity;
                // 10. NXC
                sumOfProductsNXC += greyIntensity * blurIntensity;
                // 11, 12. MAS, MAMS: angle between the pixel "vectors"; for
                // single-channel pixels the cosine is 1 whenever both are non-zero
                double norms = std::abs(greyIntensity) * std::abs(blurIntensity);
                double cosine = (norms > 0) ? (greyIntensity * blurIntensity) / norms : 1.0;
                double angle = (2.0 / PI) * std::acos(std::min(1.0, std::max(-1.0, cosine)));
                sumForMAS += angle;
                sumForMAMS += 1.0 - (1.0 - angle) * (1.0 - absDifference / 255.0);
            }
        }

        MSE = sum / N;
        PSNR = 10.0 * std::log10((255.0 * 255.0) / MSE);   // dB, base-10 logarithm
        SNR = 10.0 * std::log10(sumOfSquaresG / (N * MSE));
        SC = sumOfSquaresG / sumOfSquaresB;

        // 5. MD: maximum absolute difference
        MD = *std::max_element(absDifferenceVector.begin(), absDifferenceVector.end());

        // 6. AD: average difference
        for (size_t i = 0; i < differenceVector.size(); i++)
            sumForAD += differenceVector[i];
        AD = sumForAD / N;

        NAE = sumForNAE / sumForG;

        // 8. RAMD: mean of the R largest absolute differences (R = 10 assumed)
        const size_t R = std::min<size_t>(10, absDifferenceVector.size());
        std::partial_sort(absDifferenceVector.begin(), absDifferenceVector.begin() + R,
                          absDifferenceVector.end(), std::greater<double>());
        double sumForRAMD = 0;
        for (size_t i = 0; i < R; i++)
            sumForRAMD += absDifferenceVector[i];
        RAMD = sumForRAMD / R;

        // 9. LMSE, using the Laplacian
        //    h(I) = I(i+1,j) + I(i-1,j) + I(i,j+1) + I(i,j-1) - 4 I(i,j)
        auto laplacian = [](const Mat& img, int r, int c) {
            return double(img.at<uchar>(r - 1, c)) + img.at<uchar>(r + 1, c)
                 + img.at<uchar>(r, c - 1) + img.at<uchar>(r, c + 1)
                 - 4.0 * img.at<uchar>(r, c);
        };
        for (int row = 1; row < ROW - 1; row++)
        {
            for (int col = 1; col < COL - 1; col++)
            {
                double hG = laplacian(greyImage, row, col);
                double hB = laplacian(blurImage, row, col);
                sumForLMSEG += hG * hG;
                sumForLMSE += (hG - hB) * (hG - hB);
            }
        }
        LMSE = sumForLMSE / sumForLMSEG;

        NXC = sumOfProductsNXC / sumOfSquaresG;
        MAS = 1.0 - sumForMAS / N;
        MAMS = sumForMAMS / N;

        // 13. TED: total edge difference between the Sobel edge maps
        Mat edgeGrey, edgeBlur;
        Sobel(greyImage, edgeGrey, -1, 1, 1, 3);
        Sobel(blurImage, edgeBlur, -1, 1, 1, 3);
        for (int row = 0; row < ROW; row++)
            for (int col = 0; col < COL; col++)
                sumForTED += std::abs(double(edgeGrey.at<uchar>(row, col))
                                      - edgeBlur.at<uchar>(row, col));
        TED = sumForTED / N;

        // DFT is a project class; its outputs are not used further in this listing
        DFT calculate;
        Mat mFg, mFb, aFg, aFb;
        calculate.fourierTransform(greyImage, mFg, aFg);
        calculate.fourierTransform(blurImage, mFb, aFb);

        featureVector.push_back(MSE);
        featureVector.push_back(PSNR);
        featureVector.push_back(SNR);
        featureVector.push_back(SC);
        featureVector.push_back(MD);
        featureVector.push_back(AD);
        featureVector.push_back(NAE);
        featureVector.push_back(RAMD);
        featureVector.push_back(LMSE);
        featureVector.push_back(NXC);
        featureVector.push_back(MAS);
        featureVector.push_back(TED);

        cout << "MSE=" << MSE << endl;
        cout << "PSNR=" << PSNR << endl;
        cout << "SNR=" << SNR << endl;
        cout << "SC=" << SC << endl;
        cout << "MD=" << MD << endl;
        cout << "AD=" << AD << endl;
        cout << "NAE=" << NAE << endl;
        cout << "RAMD=" << RAMD << endl;
        cout << "LMSE=" << LMSE << endl;
        cout << "NXC=" << NXC << endl;
        cout << "MAS=" << MAS << endl;
        cout << "TED=" << TED << endl;

        // One row of 12 features per image, together with its label
        Mat vec = Mat(featureVector).reshape(0, 1);
        trainingData.push_back(vec);
        trainingLabels.push_back(labels[index]);
    }

    SupportVectorMachine svm;
    svm.training(trainingData, trainingLabels);
}

ADVANTAGES

➢ Good Performance

➢ More Secure

➢ Fast

➢ User-friendly

➢ Cheap and easy to embed in existing systems

➢ Low complexity

DISADVANTAGES

➢ Different lighting conditions affect spoofing detection

➢ The quality of the camera also affects the accuracy of the results